I am not very experienced in 3D design or rendering, but I believe something like this happens.

An object will have a model, and this model is seen through a viewport/perspective determined by where you are viewing it from.

Based on this, rasterization will occur, converting the projected view into pixels (a rasterized image).

All of this should be handled in the OpenGL pipeline or a similar 3D rendering process.
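To make those steps a bit more concrete, here is a rough sketch of the idea in plain Python. It is not how OpenGL does it internally. The function names (`project`, `to_viewport`, `rasterize`), the simple pinhole projection, and the tiny 8x8 "screen" are all assumptions made up for illustration.

```python
def project(point, focal=1.0):
    """Perspective-project a 3D point (x, y, z) onto the z = focal plane."""
    x, y, z = point
    return (focal * x / z, focal * y / z)


def to_viewport(p, width, height):
    """Map coordinates in [-1, 1] to pixel coordinates (y axis pointing down)."""
    x, y = p
    return ((x + 1) * 0.5 * width, (1 - y) * 0.5 * height)


def edge(a, b, p):
    """Signed area test: which side of the edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(tri, width, height):
    """Fill every pixel whose center lies inside the 2D triangle."""
    a, b, c = tri
    grid = [[0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            p = (col + 0.5, row + 0.5)  # sample at the pixel center
            w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
            inside = (w0 >= 0 and w1 >= 0 and w2 >= 0) or \
                     (w0 <= 0 and w1 <= 0 and w2 <= 0)
            if inside:
                grid[row][col] = 1
    return grid


# Hypothetical model: one triangle sitting 2 units in front of the viewer.
model = [(-1, -1, 2), (1, -1, 2), (0, 1, 2)]
screen_tri = [to_viewport(project(v), 8, 8) for v in model]
image = rasterize(screen_tri, 8, 8)

for row in image:
    print("".join("#" if px else "." for px in row))
```

A real pipeline adds a lot on top of this (transform matrices, clipping, depth testing, shading), but the order is the same: model, then view/projection, then rasterization into pixels.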

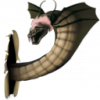

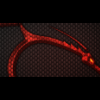

And yes, that is the technique that I was thinking of. Here is an image similar to the one I was thinking of that demonstrates the technique running.

As you can see, the image starts out larger. The red line represents the least-cost path for that iteration (the pixels that are least noticeable), and the pixels under the red line are then removed.
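The least-cost path part can be sketched with a small dynamic-programming pass. I am assuming the technique here is what is usually called seam carving, and that each pixel already has an "energy" value saying how noticeable it is (often derived from the image gradient). The names `find_seam` and `remove_seam` and the tiny energy grid are made up for this example.

```python
def find_seam(energy):
    """Return, for each row, the column of the least-cost top-to-bottom path."""
    h, w = len(energy), len(energy[0])
    cost = [row[:] for row in energy]  # cumulative cost table

    # Each cell adds the cheapest of its (up to) three neighbours in the row above.
    for r in range(1, h):
        for c in range(w):
            lo, hi = max(c - 1, 0), min(c + 1, w - 1)
            cost[r][c] += min(cost[r - 1][lo:hi + 1])

    # Start at the cheapest cell in the bottom row and walk back up.
    c = min(range(w), key=lambda j: cost[h - 1][j])
    seam = [c]
    for r in range(h - 1, 0, -1):
        lo, hi = max(c - 1, 0), min(c + 1, w - 1)
        c = min(range(lo, hi + 1), key=lambda j: cost[r - 1][j])
        seam.append(c)
    seam.reverse()
    return seam


def remove_seam(image, seam):
    """Drop one pixel per row, making the image one column narrower."""
    return [row[:c] + row[c + 1:] for row, c in zip(image, seam)]


# Hypothetical energy map: low values are the "least noticeable" pixels.
energy = [
    [9, 1, 9],
    [9, 9, 1],
    [9, 1, 9],
]
seam = find_seam(energy)            # the "red line" for this iteration
smaller = remove_seam(energy, seam)
print(seam, smaller)
```

Repeating this (recomputing the energy, finding the next seam, removing it) shrinks the image one column at a time, which matches the iterations shown in the image.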